ROS for Beginners: How to Learn ROS

Now is the moment to start learning ROS.

We find that many people who are new to ROS lack the basic skills needed to start programming in ROS. That is why we have included a section of prerequisites that you must meet before trying to get into ROS.

PREREQUISITES

You need to know in advance how to program in C++ or Python. You also need to be comfortable using the Linux shell. Those two are mandatory! If you don’t know them yet, I recommend you start by learning how the Linux shell works and then move on to learning how to code in Python. If you don’t know C++, do not worry about it for now. Just learn Python and the Linux shell. We recommend the following free online courses:

As I already mentioned, ROS can be programmed with C++ or Python. However, if you don’t know C++ very well, do not try to get into ROS with C++. If that is your situation, learn ROS with Python. Of course, you can start learning C++ now, because C++ is the language used in the robotics industry and you will need to make the transition from ROS Python to ROS C++ later. But your initial learning of ROS should be done in Python. I know you may think you can handle it and take on both things at the same time. I do not agree at all. Bad decision. Good luck.

Final comment: bindings for other languages, like Prolog, Lisp, Node.js, and R, also exist. A complete list of currently supported languages is available on the ROS wiki. I do not recommend learning ROS with any of those languages, though. ROS is complicated enough without complicating it further with experimental languages. Besides, you will have plenty of time to practice with them once you know ROS with Python.

LEARNING ROS

If you know the basics of programming in one of those languages and of using the shell, the next step is to learn ROS. To do that, you have five methods to choose from.

Five methods to learn ROS

1- The official tutorials: ROS wiki

The official ROS tutorial website, provided by Open Robotics (the organization that builds and maintains ROS), is very comprehensive and available in multiple languages. It covers ROS installation, how-tos, documentation, and more, and it is completely free. Just follow the tutorials on the ROS Wiki page and get started. These tutorials are traditional academic learning material: they describe concepts one by one, following a well-defined hierarchy. It is good material, but it is easy to get lost while reading, and it takes time to grasp the core spirit of ROS.

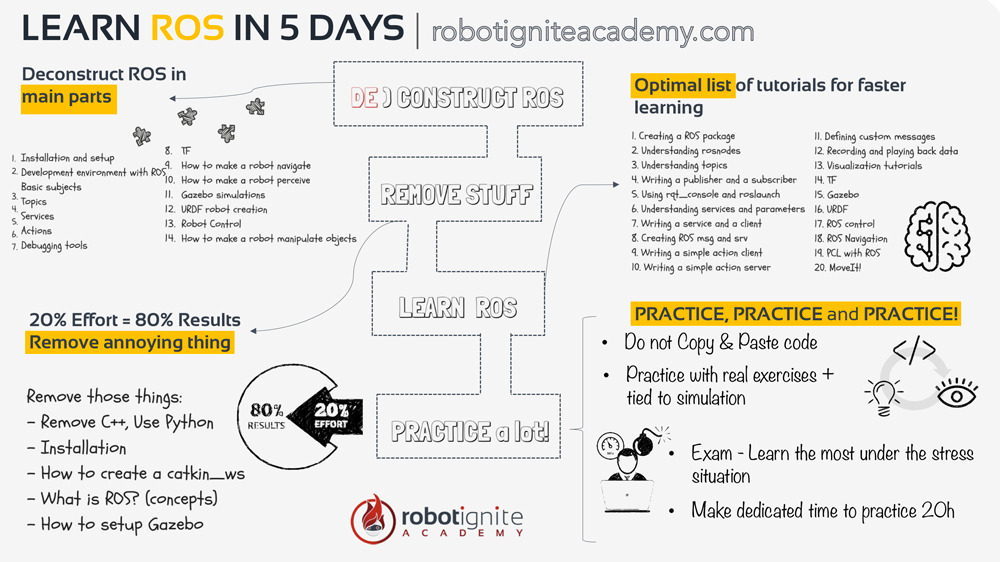

The list of tutorials there is so long that it can be overwhelming. If you decide to use this method to learn ROS, we recommend following the tutorials below in this order for optimal learning:

- Navigating the ROS file system

- Creating a ROS package

- Understanding topics

- Writing publishers and subscribers

- Examining publishers and subscribers

- Writing a service and client

- Examining the service and client

- Creating a ROS msg and srv

- Understanding services and parameters

- Defining custom messages

- Recording and playing back data

- ROS TF

- ROS URDF

- ROS Control

- ROS Navigation

2- Video ROS tutorials

Video tutorials show, in a practical way, how programs are created and run. They let you watch a professional or instructor carry out a ROS project, which to some degree alleviates a beginner’s fear of starting to learn ROS. There are plenty of ROS tutorials on YouTube. Just search for “ROS tutorials” and choose among the videos shown.

But there is a drawback: anyone can create a video. No qualification is required to publish this content, so credibility can be questionable. It is also difficult to find videos arranged in an order that provides a full learning path. We only recommend this method when you want to learn about a very specific ROS subject. One of the ROS video tutorial courses by Dr. Anis Koubaa of Prince Sultan University would be a great starting point for learning ROS. The course combines a guided tutorial, various examples, and exercises of increasing difficulty, along with the use of an autonomous robot.

Another great resource is our YouTube channel. Sorry to mention it like that, but it is true, judging by the comments people leave us! On our channel, we teach ROS basics, ROS2 basics, Gazebo basics, ROS Q&A, and even real robot projects with ROS. Please have a look and let us know whether you actually find it useful. If you are looking for an answer to a specific ROS question, such as how to read laser scan data, how to move a robot to a certain point, or how to do mapping with ROS, check out our weekly ROS Q&A series.

3- ROS face-to-face training

Face-to-face instructional courses are the traditional way of teaching. They build strong ROS foundations in students. ROS training is usually a short course that requires you to focus on learning ROS in a particular environment and period of time. You interact with instructors and colleagues, which allows you to get feedback directly. Learning under the guidance and supervision of instructors definitely encourages better results. The following are some of the institutions that hold live ROS training or summer courses on a regular basis:

- FH Aachen

- ROS China Summer School

- ROS-Industrial trainings: a series of ROS trainings with a specific emphasis on ROS-Industrial, held across three continents: Europe, America, and Asia

When we talk about ROS face-to-face training, we are not including conferences, since what you can learn there is limited and very specific. ROS conferences are more about staying up to date on the latest progress in the field than about learning ROS. You are expected to attend with at least basic ROS knowledge in order to benefit from them. Some of the ROS conferences around the world are:

- The official ROSCon (2019)

- ROSCon France (2019)

- ROSCon Japan (2019)

- The ROS Developers Conference (2019), online

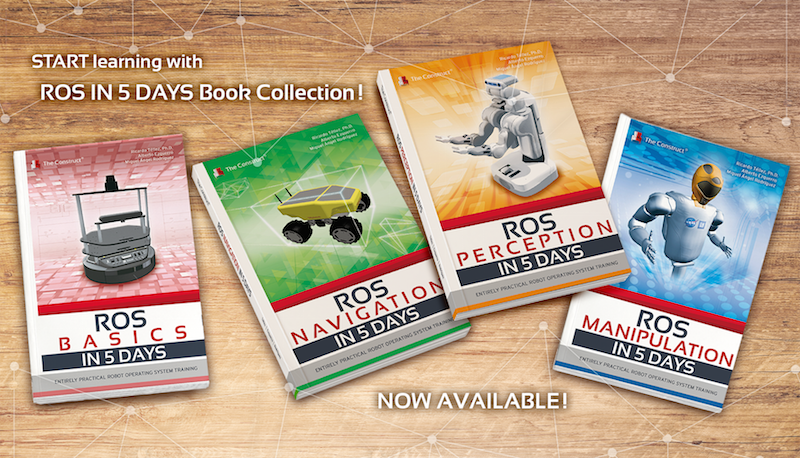

4- ROS Books

ROS books are written by experienced roboticists. They distill the essence of ROS and present many practical examples. Books are good tools for learning ROS; however, they require a high level of self-discipline and concentration to achieve the desired result. A book is only as good as the person using it: unless a strong sense of self-discipline keeps your full attention on the material, distractions can easily derail your progress. Here is a list of ROS books published so far:

- Programming Robots with ROS

- A Gentle Introduction to ROS

- Learning ROS for Robotics Programming – Second Edition

- ROS IN 5 DAYS Book Collection

- ROS Robotics By Example

- ROS Robotics Projects

- Mastering ROS for Robotics Programming – Second Edition

- Effective Robotics Programming with ROS – Third Edition

- Robot Operating System (ROS): The Complete Reference

- A Systematic Approach to Learning Robot Programming with ROS

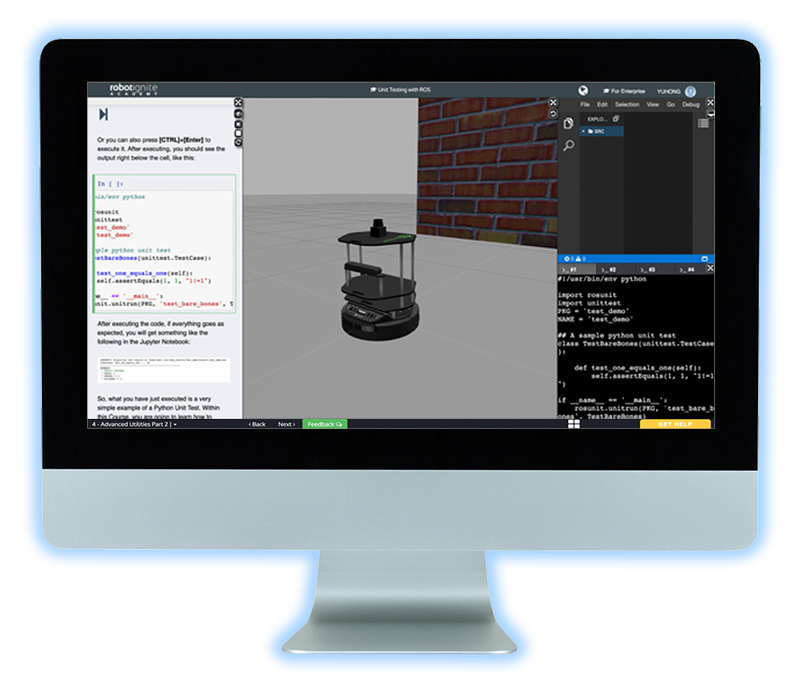

5- Integrated ROS learning platform – Robot Ignite Academy

We have created an integrated learning platform as a new way to learn ROS fast. Compared to the other methods, it provides a more comprehensive learning experience. It is the easiest and fastest of all the methods. On the platform, you learn ROS by practicing on simulated robots: you follow a step-by-step tutorial and program the robots while watching the results on the robot simulation in real time. The whole platform is integrated into a web page, so you don’t have to install anything. You just connect with a web browser from any type of computer and start learning. Perhaps the only drawback is that it is not free, although you can try the platform with a free account at www.robotigniteacademy.com. Many universities around the world use this academy to teach their students about ROS and robotics with ROS, for instance the University of Tokyo, the University of Michigan, the University of Sydney, the University of Reims, and the University of Luxembourg, as well as companies like Softbank, 3M, and HP.

HOW TO ASK QUESTIONS

While learning or creating your own ROS programs, you will inevitably run into doubts: things that don’t work as the documentation indicates, strange errors that appear even after you’ve checked that everything is correct, and so on. You will need support from the community in order to keep improving. For that purpose, you can use two different channels to ask questions:

- ROS Answers: This is the official forum for questions about ROS. Use this forum to ask your technical questions about ROS. You can also use it to search for previous answers to questions similar to yours. Finally, use it to help others by answering the questions you already know the answer to.

- ROS Discourse: This is the second official forum. However, this forum is not for answering technical questions. Instead, it is for announcing things related to ROS. If you have created a new package that interfaces ROS with neural networks, are holding an event about ROS, or are releasing a ROS product, this is the place to post it. Do not use this forum for technical questions; use it for announcements or questions related to the ROS community.

Conclusion

Out of all the methods presented here, I recommend our online Robot Ignite Academy, because it is by far the fastest and most comprehensive route for learning ROS. This is not just something I say; it is what our customers say. Our online academy has a price, but it will considerably speed up your learning of ROS. If you don’t want to spend money and you are not in a hurry, the best option is to follow the wiki tutorials. They are a little outdated and make for a slow path to learning ROS, but they definitely work. After all, that is the method the authors of this guide used to learn ROS. As a final recommendation: besides teaching ROS in the Master of Robotics at LaSalle University, Ricardo delivers a free live online class about ROS every Tuesday at 18:00 CEST/CET (the ROS Developers Live Class). We recommend attending, since it is 100% practice-based and covers a new ROS subject every week.