Hi ROS Developers, and welcome to the ROS Developers Podcast, the program that gives you insights from the experts about how to program your robots with ROS.

I’m Ricardo Tellez, from The Construct.

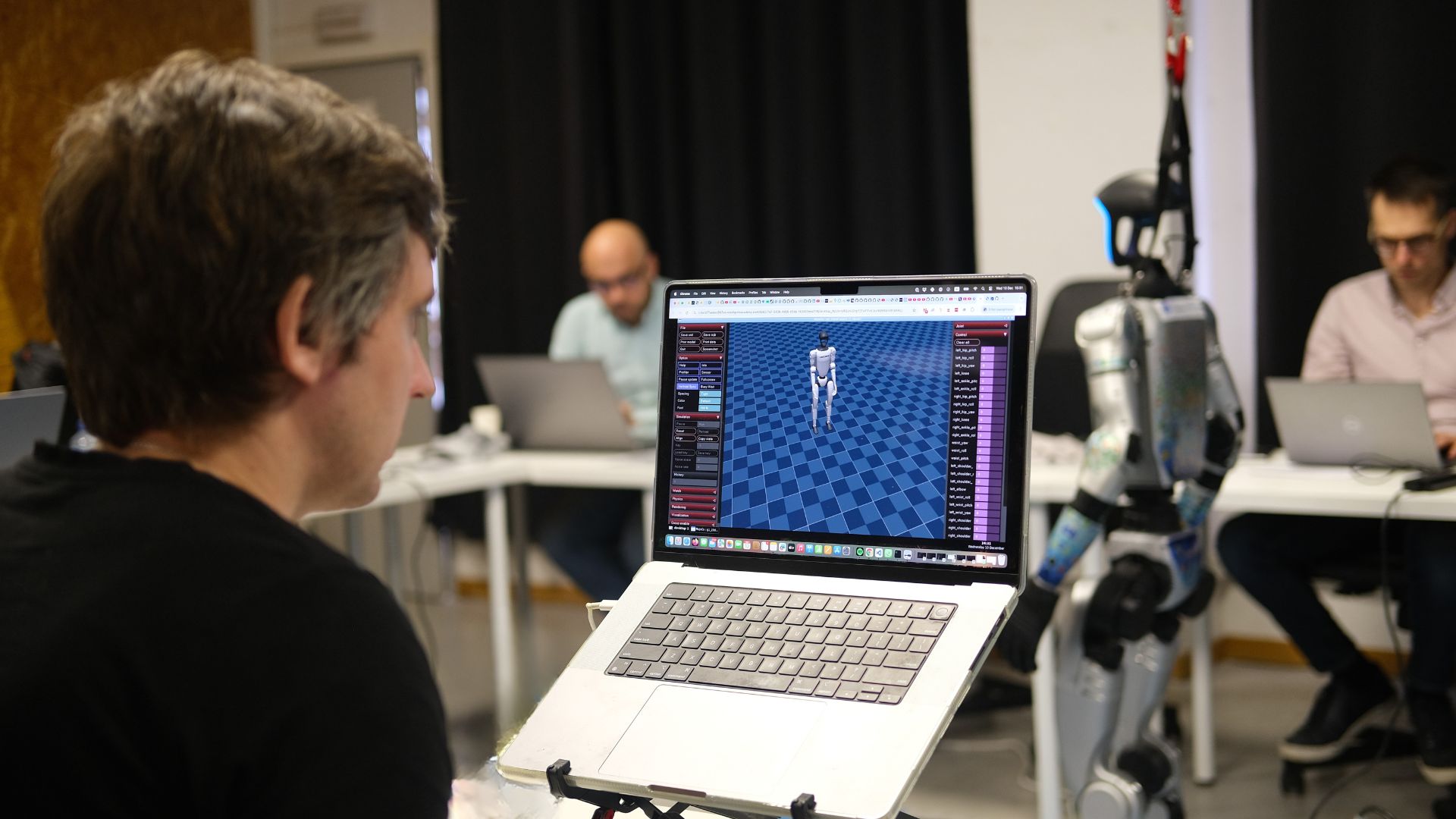

Today, we are at the ROS Industrial conference (Europe), where I had the chance to meet Christian Henkel, who presented the dockeROS library. DockeROS lets you dockerize ROS applications and send them to remote robots. In this interview, Christian explains how to do that, how to test dockeROS, and how its containers differ from snaps.

Selected quote:

“I created dockeROS as an easy way to deploy ROS software on several robots, and to update it in several robots at once”

Christian Henkel

Related links:

- Follow Christian Henkel on LinkedIn.

- Follow Christian on Twitter

- Follow Christian Henkel on Research Gate.

- Github of DockeROS

- Slides of his presentation of dockeROS at ROS Industrial conference.

- Modular platform for the orchestration and management of thousands of AGVs

- Video of cloud navigation example.

- Some related research work from Christian Henkel:

- The Robot Ignite Academy, our online academy that teaches you ROS in 5 days

- The ROS Development Studio, our online platform to program ROS online only with a browser

- The ROS Developers Podcast full length tune.

Subscribe to the podcast using any of the following methods

- ROS Developers Podcast on iTunes

- ROS Developers Podcast on Stitcher

Or listen to it on Spotify:


We’re deploying our application through Docker, too, but in one mega-sized container image that runs everything. It works great, but Christian’s smaller containers are probably much easier to handle than our 6 GB beasts. Definitely +1 for mass deployments via Docker.

One point: he said he thought containerized processes may have access to the host kernel’s RT facilities, but that he had no experience with this and that Docker would introduce latency. Our controller hosts run a PREEMPT_RT kernel with an EtherCAT master, and we found the EtherCAT application starts complaining about missed datagrams when latency exceeds about 40 µs. However, we’ve seen no difference in latency at all between the host and the container, and we get about 6 µs max jitter in both. I wonder if Christian was speaking from experience with VMs, where processes can’t take advantage of any host RT facilities.
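For anyone who wants to try the host-versus-container comparison themselves, here is a minimal sketch of a periodic wake-up latency probe in Python. It is not how we measured our numbers, and the function names and defaults are made up for illustration. Python's interpreter overhead is far larger than the microsecond figures above, so the absolute values mean little; the idea is only to run the same script on the host and inside the container and compare. A dedicated tool such as `cyclictest` is the better choice for real measurements.

```python
import time


def measure_wakeup_latency(interval_us=1000, iterations=200):
    """Sleep until a fixed periodic deadline and record how late each wake-up is.

    Returns a list of latencies in microseconds (hypothetical helper,
    not part of dockeROS or any RT toolkit).
    """
    interval_ns = interval_us * 1000
    latencies_us = []
    next_wakeup = time.monotonic_ns() + interval_ns
    for _ in range(iterations):
        remaining_ns = next_wakeup - time.monotonic_ns()
        if remaining_ns > 0:
            time.sleep(remaining_ns / 1e9)
        now = time.monotonic_ns()
        # Lateness relative to the intended deadline, not to the sleep call.
        latencies_us.append((now - next_wakeup) / 1000.0)
        next_wakeup += interval_ns
    return latencies_us


def summarize(latencies_us):
    """Reduce the samples to min / average / max, all in microseconds."""
    return {
        "min_us": min(latencies_us),
        "avg_us": sum(latencies_us) / len(latencies_us),
        "max_us": max(latencies_us),
    }


if __name__ == "__main__":
    stats = summarize(measure_wakeup_latency())
    print("wake-up latency (us): min={min_us:.1f} avg={avg_us:.1f} max={max_us:.1f}".format(**stats))
```

Run it once on the host and once inside the container, under the same load, and compare the `max_us` values.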

The other big advantage we get from running in Docker vs. VMs is direct access to GPU hardware. Running rviz in a VM is painful, since all 3D rendering must happen on the CPU. Docker containers, however, share the host kernel and are isolated only by namespaces and cgroups, rather like `chroot` on steroids, so they can be given direct access to the GPU. We can’t tell the difference between a containerized rviz and one running directly on the host.
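As a rough illustration of what giving a container the GPU can look like, the sketch below builds a `docker run` command line that passes the host's `/dev/dri` render devices and X11 socket into the container. This is an assumption-laden example rather than our actual setup: the image tag, the helper name, and the default display are placeholders, the X server must also allow the connection, and hosts with the proprietary NVIDIA driver typically need the NVIDIA container runtime instead of `/dev/dri`.

```python
import os
import shlex


def build_rviz_command(image="osrf/ros:kinetic-desktop-full", display=None):
    """Build a `docker run` argument list for a GPU-accelerated rviz.

    Hypothetical helper: the image tag and defaults are assumptions.
    """
    display = display or os.environ.get("DISPLAY", ":0")
    return [
        "docker", "run", "--rm", "-it",
        # Expose the host's DRM render devices (Intel/AMD/Mesa drivers).
        "--device", "/dev/dri",
        # Point the container at the host's X server.
        "--env", f"DISPLAY={display}",
        "--volume", "/tmp/.X11-unix:/tmp/.X11-unix:rw",
        image,
        "rviz",
    ]


if __name__ == "__main__":
    # Print the command rather than running it, so it can be reviewed first.
    print(shlex.join(build_rviz_command()))
```

rviz also needs a reachable ROS master, so in practice this would be combined with whatever networking setup the rest of the ROS system uses.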