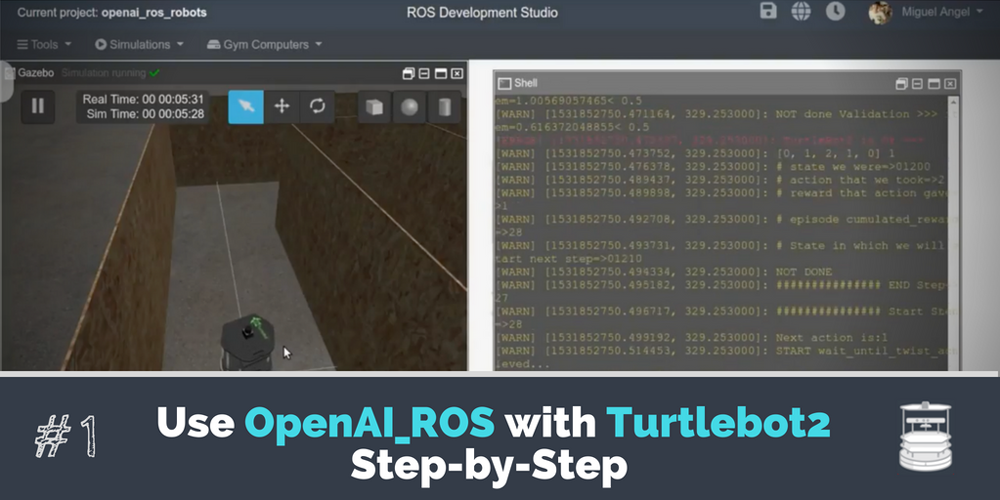

In this new video series, we are going to learn how to use the openai_ros package created by TheConstruct. You will learn to use it with all the robots that we give suport to. You will learn in this video divided into two parts, how to use openai_ros with Turtlebot2. You will learn how to setup everything to use our package and make it learn how to move around a maze with very little coding.

Here is the git of OpenAi_ros:

https://bitbucket.org/theconstructcore/openai_ros/src/master/

Here is the git to the code generated in this video and the next to come:

https://bitbucket.org/theconstructcore/openai_examples_projects/src/master/

Here a link to the OpenAI 101 course in RobotIgniteAcademy

Step 1. Create a project in ROS Development Studio(ROSDS)

ROSDS helps you follow our tutorial in a fast pace without dealing without setting up an environment locally. If you haven’t had an account yet, you can create a free account here.

Step 2. Clone the repository and create a package for the project

At first, we have to clone the repositories that we need for this project

cd ~/simulation_ws/src git clone https://bitbucket.org/theconstructcore/open_ai_gym_construct.git cd ~/catkin_ws/src git clone https://bitbucket.org/theconstructcore/openai_ros.git

You can launch the simulation environment from simulations->Select launch file->gym_construct-main.launch

Inside the open_ai ROS package, we need to specify turtlebot2_maze.py as our task.

We’ll start by creating the folders for the project

cd ~/catkin_ws/src mkdir openai_examples_projects/turtle2_open_ai_ros_example

Then create three folder config, launch, and scripts under the directory. Inside the launch folder, let’s create a script called start_training.launch with the following content

<launch>

<!-- This version uses the openai_ros environments -->

<rosparam command="load" file="$(find turtle2_openai_ros_example)/config/turtlebot2_openai_qlearn_params.yaml" />

<!-- Launch the training system -->

<node pkg="turtle2_openai_ros_example" name="turtlebot2_maze" type="start_qlearning.py" output="screen"/>

</launch>

This launch file will load the parameters first for the algorithm, you can define the parameter in a file called turtlebot2_openai_qlearn_params.yaml in the config folder with the following content

turtlebot2: #namespace

running_step: 0.04 # amount of time the control will be executed

pos_step: 0.016 # increment in position for each command

#qlearn parameters

alpha: 0.1

gamma: 0.7

epsilon: 0.9

epsilon_discount: 0.999

nepisodes: 500

nsteps: 10000

number_splits: 10 #set to change the number of state splits for the continuous problem and also the number of env_variable splits

running_step: 0.06 # Time for each step

wait_time: 0.1 # Time to wait in the reset phases

n_actions: 4 # We have 3 actions, Forwards,TurnLeft,TurnRight,Backwards

n_observations: 6 # We have 6 different observations

speed_step: 1.0 # Time to wait in the reset phases

linear_forward_speed: 0.5 # Spwwed for ging fowards

linear_turn_speed: 0.05 # Lienare speed when turning

angular_speed: 0.3 # Angular speed when turning Left or Right

init_linear_forward_speed: 0.0 # Initial linear speed in which we start each episode

init_linear_turn_speed: 0.0 # Initial angular speed in shich we start each episode

new_ranges: 5 # How many laser readings we jump in each observation reading, the bigger the less laser resolution

min_range: 0.5 # Minimum meters below wich we consider we have crashed

max_laser_value: 6 # Value considered Ok, no wall

min_laser_value: 0 # Value considered there is an obstacle or crashed

desired_pose:

x: 5.0

y: 0.0

z: 0.0

forwards_reward: 5 # Points Given to go forwards

turn_reward: 1 # Points Given to turn as action

end_episode_points: 200 # Points given when ending an episode

Then it will launch the node for qlearning, let’s create two files under the scripts folder. The first one called qlearn.py with the following content which is implemented by Victor Mayoral Vilches. We won’t go into detail here.

''' Q-learning approach for different RL problems as part of the basic series on reinforcement learning @ Inspired by https://gym.openai.com/evaluations/eval_kWknKOkPQ7izrixdhriurA @author: Victor Mayoral Vilches <victor@erlerobotics.com> ''' import random class QLearn: def __init__(self, actions, epsilon, alpha, gamma): self.q = {} self.epsilon = epsilon # exploration constant self.alpha = alpha # discount constant self.gamma = gamma # discount factor self.actions = actions def getQ(self, state, action): return self.q.get((state, action), 0.0) def learnQ(self, state, action, reward, value): ''' Q-learning: Q(s, a) += alpha * (reward(s,a) + max(Q(s') - Q(s,a)) ''' oldv = self.q.get((state, action), None) if oldv is None: self.q[(state, action)] = reward else: self.q[(state, action)] = oldv + self.alpha * (value - oldv) def chooseAction(self, state, return_q=False): q = [self.getQ(state, a) for a in self.actions] maxQ = max(q) if random.random() < self.epsilon: minQ = min(q); mag = max(abs(minQ), abs(maxQ)) # add random values to all the actions, recalculate maxQ q = [q[i] + random.random() * mag - .5 * mag for i in range(len(self.actions))] maxQ = max(q) count = q.count(maxQ) # In case there're several state-action max values # we select a random one among them if count > 1: best = [i for i in range(len(self.actions)) if q[i] == maxQ] i = random.choice(best) else: i = q.index(maxQ) action = self.actions[i] if return_q: # if they want it, give it! return action, q return action def learn(self, state1, action1, reward, state2): maxqnew = max([self.getQ(state2, a) for a in self.actions]) self.learnQ(state1, action1, reward, reward + self.gamma*maxqnew)

The next one is the training script called start_qlearning.py with the following content

#!/usr/bin/env python

import gym

import numpy

import time

import qlearn

from gym import wrappers

# ROS packages required

import rospy

import rospkg

# import our training environment

from openai_ros.turtlebot2 import turtlebot2_maze

if __name__ == '__main__':

rospy.init_node('turtlebot2_maze_qlearn', anonymous=True, log_level=rospy.WARN)

# Create the Gym environment

env = gym.make('TurtleBot2Maze-v0')

rospy.loginfo("Gym environment done")

# Set the logging system

rospack = rospkg.RosPack()

pkg_path = rospack.get_path('my_turtlebot2_maze')

outdir = pkg_path + '/training_results'

env = wrappers.Monitor(env, outdir, force=True)

rospy.loginfo("Monitor Wrapper started")

last_time_steps = numpy.ndarray(0)

# Loads parameters from the ROS param server

# Parameters are stored in a yaml file inside the config directory

# They are loaded at runtime by the launch file

Alpha = rospy.get_param("/turtlebot2/alpha")

Epsilon = rospy.get_param("/turtlebot2/epsilon")

Gamma = rospy.get_param("/turtlebot2/gamma")

epsilon_discount = rospy.get_param("/turtlebot2/epsilon_discount")

nepisodes = rospy.get_param("/turtlebot2/nepisodes")

nsteps = rospy.get_param("/turtlebot2/nsteps")

running_step = rospy.get_param("/turtlebot2/running_step")

# Initialises the algorithm that we are going to use for learning

qlearn = qlearn.QLearn(actions=range(env.action_space.n),

alpha=Alpha, gamma=Gamma, epsilon=Epsilon)

initial_epsilon = qlearn.epsilon

start_time = time.time()

highest_reward = 0

# Starts the main training loop: the one about the episodes to do

for x in range(nepisodes):

rospy.logdebug("############### START EPISODE=>" + str(x))

cumulated_reward = 0

done = False

if qlearn.epsilon > 0.05:

qlearn.epsilon *= epsilon_discount

# Initialize the environment and get first state of the robot

observation = env.reset()

state = ''.join(map(str, observation))

# Show on screen the actual situation of the robot

# env.render()

# for each episode, we test the robot for nsteps

for i in range(nsteps):

rospy.logwarn("############### Start Step=>" + str(i))

# Pick an action based on the current state

action = qlearn.chooseAction(state)

rospy.logwarn("Next action is:%d", action)

# Execute the action in the environment and get feedback

observation, reward, done, info = env.step(action)

rospy.logwarn(str(observation) + " " + str(reward))

cumulated_reward += reward

if highest_reward < cumulated_reward:

highest_reward = cumulated_reward

nextState = ''.join(map(str, observation))

# Make the algorithm learn based on the results

rospy.logwarn("# state we were=>" + str(state))

rospy.logwarn("# action that we took=>" + str(action))

rospy.logwarn("# reward that action gave=>" + str(reward))

rospy.logwarn("# episode cumulated_reward=>" + str(cumulated_reward))

rospy.logwarn("# State in which we will start next step=>" + str(nextState))

qlearn.learn(state, action, reward, nextState)

if not (done):

rospy.logwarn("NOT DONE")

state = nextState

else:

rospy.logwarn("DONE")

last_time_steps = numpy.append(last_time_steps, [int(i + 1)])

break

rospy.logwarn("############### END Step=>" + str(i))

#raw_input("Next Step...PRESS KEY")

# rospy.sleep(2.0)

m, s = divmod(int(time.time() - start_time), 60)

h, m = divmod(m, 60)

rospy.logerr(("EP: " + str(x + 1) + " - [alpha: " + str(round(qlearn.alpha, 2)) + " - gamma: " + str(

round(qlearn.gamma, 2)) + " - epsilon: " + str(round(qlearn.epsilon, 2)) + "] - Reward: " + str(

cumulated_reward) + " Time: %d:%02d:%02d" % (h, m, s)))

rospy.loginfo(("\n|" + str(nepisodes) + "|" + str(qlearn.alpha) + "|" + str(qlearn.gamma) + "|" + str(

initial_epsilon) + "*" + str(epsilon_discount) + "|" + str(highest_reward) + "| PICTURE |"))

l = last_time_steps.tolist()

l.sort()

# print("Parameters: a="+str)

rospy.loginfo("Overall score: {:0.2f}".format(last_time_steps.mean()))

rospy.loginfo("Best 100 score: {:0.2f}".format(reduce(lambda x, y: x + y, l[-100:]) / len(l[-100:])))

env.close()

Line 20 is the most important part of this script. It will register the training environment.

From line 52 to line 102 is the training process of the algorithm. For each timestep, the algorithm will decide the action to take based on the current state. After an action is taken. The observation is measured and the reward is calculated. The algorithm will keep learning to get the highest possible reward.

After that, you can compile the package and launch the training with the following command.

cd ~/catkin_ws catkin_make source devel/setup.bash roslaunch turtle2_openai_ros_example start_training.launch

You should see the turtlebot start to moving and reset if it touches the wall.

In this example, we use the environment defined by openai_ros package, but what if I want to do some different tasks? e.g. change the actions the robot can take?

Let’s create our own environment called my_turtlebot2_maze.py under the script folder with the following content

import rospy

import numpy

from gym import spaces

from openai_ros import turtlebot2_env

from gym.envs.registration import register

timestep_limit_per_episode = 10000 # Can be any Value

register(

id='MyTurtleBot2Maze-v0',

entry_point='my_turtlebot2_maze:MyTurtleBot2MazeEnv',

timestep_limit=timestep_limit_per_episode,

)

class MyTurtleBot2MazeEnv(turtlebot2_env.TurtleBot2Env):

def __init__(self):

"""

This Task Env is designed for having the TurtleBot2 in some kind of maze.

It will learn how to move around the maze without crashing.

"""

# Only variable needed to be set here

number_actions = rospy.get_param('/turtlebot2/n_actions')

self.action_space = spaces.Discrete(number_actions)

# We set the reward range, which is not compulsory but here we do it.

self.reward_range = (-numpy.inf, numpy.inf)

#number_observations = rospy.get_param('/turtlebot2/n_observations')

"""

We set the Observation space for the 6 observations

cube_observations = [

round(current_disk_roll_vel, 0),

round(y_distance, 1),

round(roll, 1),

round(pitch, 1),

round(y_linear_speed,1),

round(yaw, 1),

]

"""

# Actions and Observations

self.linear_forward_speed = rospy.get_param('/turtlebot2/linear_forward_speed')

self.linear_turn_speed = rospy.get_param('/turtlebot2/linear_turn_speed')

self.angular_speed = rospy.get_param('/turtlebot2/angular_speed')

self.init_linear_forward_speed = rospy.get_param('/turtlebot2/init_linear_forward_speed')

self.init_linear_turn_speed = rospy.get_param('/turtlebot2/init_linear_turn_speed')

self.new_ranges = rospy.get_param('/turtlebot2/new_ranges')

self.min_range = rospy.get_param('/turtlebot2/min_range')

self.max_laser_value = rospy.get_param('/turtlebot2/max_laser_value')

self.min_laser_value = rospy.get_param('/turtlebot2/min_laser_value')

# We create two arrays based on the binary values that will be assigned

# In the discretization method.

laser_scan = self._check_laser_scan_ready()

num_laser_readings = len(laser_scan.ranges)/self.new_ranges

high = numpy.full((num_laser_readings), self.max_laser_value)

low = numpy.full((num_laser_readings), self.min_laser_value)

# We only use two integers

self.observation_space = spaces.Box(low, high)

rospy.logdebug("ACTION SPACES TYPE===>"+str(self.action_space))

rospy.logdebug("OBSERVATION SPACES TYPE===>"+str(self.observation_space))

# Rewards

self.forwards_reward = rospy.get_param("/turtlebot2/forwards_reward")

self.turn_reward = rospy.get_param("/turtlebot2/turn_reward")

self.end_episode_points = rospy.get_param("/turtlebot2/end_episode_points")

self.cumulated_steps = 0.0

# Here we will add any init functions prior to starting the MyRobotEnv

super(MyTurtleBot2MazeEnv, self).__init__()

def _set_init_pose(self):

"""Sets the Robot in its init pose

"""

self.move_base( self.init_linear_forward_speed,

self.init_linear_turn_speed,

epsilon=0.05,

update_rate=10)

return True

def _init_env_variables(self):

"""

Inits variables needed to be initialised each time we reset at the start

of an episode.

:return:

"""

# For Info Purposes

self.cumulated_reward = 0.0

# Set to false Done, because its calculated asyncronously

self._episode_done = False

def _set_action(self, action):

"""

This set action will Set the linear and angular speed of the turtlebot2

based on the action number given.

:param action: The action integer that set s what movement to do next.

"""

rospy.logdebug("Start Set Action ==>"+str(action))

# We convert the actions to speed movements to send to the parent class CubeSingleDiskEnv

if action == 0: #FORWARD

linear_speed = self.linear_forward_speed

angular_speed = 0.0

self.last_action = "FORWARDS"

elif action == 1: #LEFT

linear_speed = self.linear_turn_speed

angular_speed = self.angular_speed

self.last_action = "TURN_LEFT"

elif action == 2: #RIGHT

linear_speed = self.linear_turn_speed

angular_speed = -1*self.angular_speed

self.last_action = "TURN_RIGHT"

elif action == 3: #RIGHT

linear_speed = -self.linear_turn_speed

angular_speed = 0.0

self.last_action = "BACKWARDS"

# We tell TurtleBot2 the linear and angular speed to set to execute

self.move_base(linear_speed, angular_speed, epsilon=0.05, update_rate=10)

rospy.logdebug("END Set Action ==>"+str(action))

def _get_obs(self):

"""

Here we define what sensor data defines our robots observations

To know which Variables we have acces to, we need to read the

TurtleBot2Env API DOCS

:return:

"""

rospy.logdebug("Start Get Observation ==>")

# We get the laser scan data

laser_scan = self.get_laser_scan()

discretized_observations = self.discretize_observation( laser_scan,

self.new_ranges

)

rospy.logdebug("Observations==>"+str(discretized_observations))

rospy.logdebug("END Get Observation ==>")

return discretized_observations

def _is_done(self, observations):

if self._episode_done:

rospy.logerr("TurtleBot2 is Too Close to wall==>")

else:

rospy.logerr("TurtleBot2 is Ok ==>")

return self._episode_done

def _compute_reward(self, observations, done):

if not done:

if self.last_action == "FORWARDS":

reward = self.forwards_reward

elif self.last_action == "BACKWARDS":

reward = -1*self.forwards_reward

else:

reward = self.turn_reward

else:

reward = -1*self.end_episode_points

rospy.logdebug("reward=" + str(reward))

self.cumulated_reward += reward

rospy.logdebug("Cumulated_reward=" + str(self.cumulated_reward))

self.cumulated_steps += 1

rospy.logdebug("Cumulated_steps=" + str(self.cumulated_steps))

return reward

# Internal TaskEnv Methods

def discretize_observation(self,data,new_ranges):

"""

Discards all the laser readings that are not multiple in index of new_ranges

value.

"""

self._episode_done = False

discretized_ranges = []

mod = len(data.ranges)/new_ranges

rospy.logdebug("data=" + str(data))

rospy.logwarn("new_ranges=" + str(new_ranges))

rospy.logwarn("mod=" + str(mod))

for i, item in enumerate(data.ranges):

if (i%mod==0):

if item == float ('Inf') or numpy.isinf(item):

discretized_ranges.append(self.max_laser_value)

elif numpy.isnan(item):

discretized_ranges.append(self.min_laser_value)

else:

discretized_ranges.append(int(item))

if (self.min_range > item > 0):

rospy.logerr("done Validation >>> item=" + str(item)+"< "+str(self.min_range))

self._episode_done = True

else:

rospy.logwarn("NOT done Validation >>> item=" + str(item)+"< "+str(self.min_range))

return discretized_ranges

Please notice the ID defined in line 10 is the one we are going to use

In line 15, you can see that the environment is inherited from the turtlebot2_env from the openai_ros package. By doing that, you don’t need to define how to move the robot or get the sensor data since the openai_ros package already defined it for you.

Then let’s set up a new training script and launch file. We’ll call the new training script my_start_qlearning_maze.py with same same content as start_qlearning.py but with the following changes

...

from gym import wrappers

# ROS packages required

import rospy

import rospkg

# import our training environment

import my_turtlebot2_maze

if __name__ == '__main__':

rospy.init_node('example_turtlebot2_maze_qlearn', anonymous=True, log_level=rospy.WARN)

# Create our own Gym environment

env = gym.make('MyTurtleBot2Maze-v0')

rospy.loginfo("Gym environment done")

...

Here we directly import the environment from the previous script and use the ID it defined. The last step is to create the start_training_maze.launch file under the launch folder with the following content.

<launch>

<!-- This version uses the openai_ros environments -->

<rosparam command="load" file="$(find turtle2_openai_ros_example)/config/turtlebot2_openai_qlearn_params.yaml" />

<!-- Launch the training system -->

<node pkg="turtle2_openai_ros_example" name="example_turtlebot2_maze_qlearn" type="my_start_qlearning_maze.py" output="screen"/>

</launch>

This launch file will launch the node which uses the last script for training.

You can define different action or reward as you want, but remember to also change the n_actions parameter in the turtlebot2_openai_qlearn_params.yaml because it is used by the openai gym.

Have fun!

If you want to learn more about OpenAI gym, please check our OpenAI Gym for Robotics 101 course. You’ll learn how to create environments and train robot for different task in detail.

Edit by: Tony Huang

0 Comments