[ROS Q&A] 111 – How to plan for a MoveIt named pose in Python. MoveIt ROS tutorial.

In this MoveIt ROS tutorial video, we show you how to plan and execute a trajectory to a previously created named pose using Python code.
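Here is a minimal sketch of that kind of Python code, using moveit_commander's MoveGroupCommander. The planning group name "arm" and the named pose "home" are hypothetical placeholders. Replace them with the group and named pose you defined, for example under "Robot Poses" in the MoveIt Setup Assistant.

```python
import sys


def go_to_named_pose(group, pose_name):
    """Plan and execute a trajectory to a named pose stored for this group.

    `group` is expected to behave like moveit_commander.MoveGroupCommander.
    Returns the result of group.go(), which is True when the motion succeeded.
    """
    group.set_named_target(pose_name)  # look up the joint values saved under this name
    success = group.go(wait=True)      # plan and execute, blocking until finished
    group.stop()                       # make sure there is no residual movement
    return success


def main():
    # Imported here so the helper above can be reused without a ROS installation.
    import rospy
    import moveit_commander

    moveit_commander.roscpp_initialize(sys.argv)
    rospy.init_node("plan_named_pose", anonymous=True)
    # Hypothetical names: use your own planning group and named pose.
    group = moveit_commander.MoveGroupCommander("arm")
    ok = go_to_named_pose(group, "home")
    rospy.loginfo("Reached named pose: %s", ok)
    moveit_commander.roscpp_shutdown()


if __name__ == "__main__":
    main()
```

The helper depends only on set_named_target(), go() and stop(), so you can reuse it for any planning group and any named pose.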


This is a quick guide on how to get your Raspberry Pi up and running so you can take your first steps into robotics with ROS (Kinetic).

This guide is a compilation of methods described by other people, so please do check out the links if you need more detailed explanations.

In this guide you are going to learn how to:

You can find the full Bill of Materials at the bottom of the post, in case you want the exact same setup.

So let’s get to it, shall we?

From now on, this post assumes that you have all the material used in this example. If not, please refer to the Bill of Materials. Normally, your starter kit’s MicroSD card already has an OS installed with NOOBS. But we want the full Ubuntu experience, which makes installing ROS Kinetic much easier.

For this part, we will use an Ubuntu machine. The process is slightly different on Windows or macOS.

Download the Ubuntu MATE image from the official Ubuntu page.

We will use a graphical tool (GNOME Disks) because it is simply easier. Type in your shell:

sudo apt-get install gnome-disk-utility

https://www.youtube.com/watch?v=V_6GNyL6Dac

Now plug your Raspberry Pi into a power outlet, connect it to a monitor through HDMI and... there you have it!

Now that your Raspberry Pi is running Ubuntu MATE, set up the LCD screen. Type the following in a terminal on your Raspberry Pi (connect the HDMI cable to the nearest monitor first):

git clone https://github.com/goodtft/LCD-show.git
chmod -R 755 LCD-show
cd LCD-show/
sudo ./LCD5-show


OK, you now have Ubuntu with a cute LCD screen. Next, install the full ROS Kinetic desktop, with the Gazebo simulator, RViz and everything else you need for robotics projects. Open a terminal on your Raspberry Pi and type:

sudo sh -c 'echo "deb http://packages.ros.org/ros/ubuntu $(lsb_release -sc) main" > /etc/apt/sources.list.d/ros-latest.list'
sudo apt-key adv --keyserver hkp://ha.pool.sks-keyservers.net:80 --recv-key 421C365BD9FF1F717815A3895523BAEEB01FA116
sudo apt-get update
sudo apt-get install ros-kinetic-desktop-full
sudo rosdep init
rosdep update
echo "source /opt/ros/kinetic/setup.bash" >> ~/.bashrc
source ~/.bashrc
sudo apt-get install python-rosinstall python-rosinstall-generator python-wstool build-essential
mkdir -p ~/catkin_ws/src
cd ~/catkin_ws/
catkin_make
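To check the installation from Python, you can use a small sketch like the one below. It inspects the environment variables that sourcing /opt/ros/kinetic/setup.bash sets. The helper name check_ros_env is hypothetical. Run it in a new terminal, or after source ~/.bashrc.

```python
import os


def check_ros_env(env, distro="kinetic"):
    """Return a list of problems found in an environment mapping (empty if OK)."""
    problems = []
    if env.get("ROS_DISTRO") != distro:
        problems.append("ROS_DISTRO is %r, expected %r" % (env.get("ROS_DISTRO"), distro))
    # setup.bash puts /opt/ros/<distro>/share on the package path
    if "/opt/ros/%s" % distro not in env.get("ROS_PACKAGE_PATH", ""):
        problems.append("ROS_PACKAGE_PATH does not include /opt/ros/%s" % distro)
    return problems


if __name__ == "__main__":
    issues = check_ros_env(os.environ)
    print("ROS environment looks OK" if not issues else "\n".join(issues))
```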

And there you go: your Raspberry Pi is now ROS-ready.

Now we are going to set up our night vision camera so that we can do all kinds of cool stuff, like object recognition, person detection, AI learning...

Type in your Raspberry Pi terminal:

sudo apt-get install libraspberrypi-dev
sudo pip install picamera

Now open the file /boot/config.txt and add the following two lines at the bottom of the file:

start_x=1
gpu_mem=128
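If you prefer to script this step, the sketch below appends the two settings only when they are missing, so running it twice does not duplicate them. ensure_config_lines is a hypothetical helper. Writing to /boot/config.txt requires root, so run the script with sudo.

```python
def ensure_config_lines(path, lines=("start_x=1", "gpu_mem=128")):
    """Append each setting to `path` unless an identical line is already there.

    Returns the list of lines that were actually appended.
    """
    with open(path) as f:
        content = f.read()
    existing = set(line.strip() for line in content.splitlines())
    missing = [line for line in lines if line not in existing]
    if missing:
        with open(path, "a") as f:
            if content and not content.endswith("\n"):
                f.write("\n")  # don't glue the first new setting onto the last line
            f.write("\n".join(missing) + "\n")
    return missing


if __name__ == "__main__":
    print(ensure_config_lines("/boot/config.txt"))
```

The helper does not replace lines that set the same option to a different value (for example gpu_mem=64). If the file already configures the camera or GPU memory, check it by hand.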

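Then reboot so the changes take effect:

sudo reboot

Once the Raspberry Pi is back up, test the camera with this short Python script. It saves a picture called mytest_image.jpg:

import time
import picamera

# The context manager closes the camera automatically, even on errors
with picamera.PiCamera() as camera:
    time.sleep(2)  # give the sensor time to adjust exposure and white balance
    camera.capture('mytest_image.jpg')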

But we need to publish our camera images into ROS, so that we can then use the thousands of ROS packages to do all the nice robotics stuff that we love!

For that, we are going to use raspicam_node, a ROS package created for exactly this purpose: publishing Raspberry Pi camera images on an image topic. Open a terminal on your Raspberry Pi and type:

cd ~/catkin_ws/src
git clone https://github.com/dganbold/raspicam_node.git
cd ~/catkin_ws/
catkin_make --pkg raspicam_node

If the compilation succeeded, you can now use the package. First, start the node, which provides all the camera services:

cd ~/catkin_ws/
source devel/setup.bash
roslaunch raspicam_node camera_module_v2_640x480_30fps.launch

Then, in another terminal, call the service that activates the camera and starts publishing. When capture starts, a red LED lights up on the camera, so you can see that it worked.

rosservice call /raspicam_node/start_capture


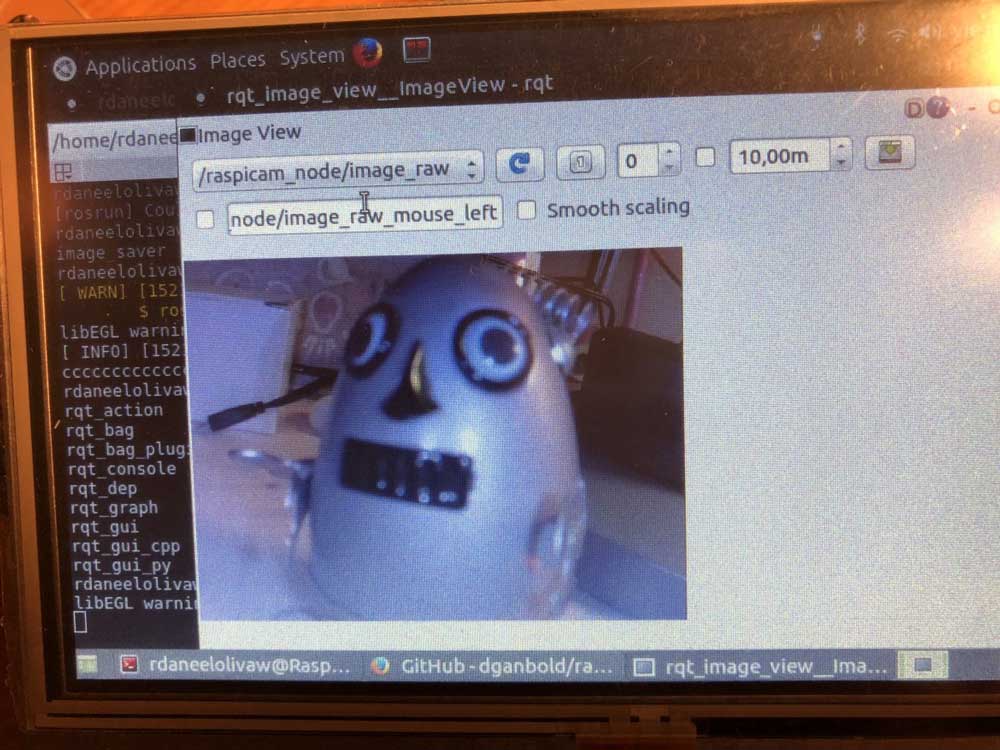
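You can also call the same service from a Python node with rospy. This sketch makes two assumptions. First, the service type is std_srvs/Empty, since the rosservice call above passes no arguments. Second, a matching stop_capture service exists. Verify both with rosservice list and rosservice info /raspicam_node/start_capture. The function names are hypothetical.

```python
def capture_service(node="raspicam_node", start=True):
    """Build the name of the raspicam_node capture service to call."""
    return "/%s/%s_capture" % (node, "start" if start else "stop")


def set_capture(start=True, timeout=10.0):
    # Imported lazily so capture_service() can be used without ROS installed.
    import rospy
    from std_srvs.srv import Empty  # assumption: the service type is std_srvs/Empty

    name = capture_service(start=start)
    rospy.wait_for_service(name, timeout=timeout)  # raises if the node is not running
    rospy.ServiceProxy(name, Empty)()              # empty request, empty response


if __name__ == "__main__":
    import rospy
    rospy.init_node("raspicam_capture_switch", anonymous=True)
    set_capture(start=True)
```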

And now you are ready to view the images and use them in ROS. You can use rqt_image_view, for example, to connect to the image topic and display it. Here are two options to see the video stream:

rosrun image_view image_view image:=/raspicam_node/image_raw
rosrun rqt_image_view rqt_image_view
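To use the stream in your own code instead of a viewer, a minimal rospy subscriber could look like the sketch below. It assumes /raspicam_node/image_raw carries sensor_msgs/Image messages, which is what image_view expects above. It estimates the frame rate from message arrival times. estimate_fps is a hypothetical helper.

```python
from collections import deque


def estimate_fps(stamps):
    """Average frame rate from increasing timestamps in seconds (0.0 if unknown)."""
    if len(stamps) < 2:
        return 0.0
    span = stamps[-1] - stamps[0]
    return (len(stamps) - 1) / span if span > 0 else 0.0


def main():
    import rospy
    from sensor_msgs.msg import Image

    stamps = deque(maxlen=30)  # arrival times of the last 30 frames
    count = [0]                # list so the callback can update it (Python 2 friendly)

    def on_image(msg):
        stamps.append(rospy.get_time())
        count[0] += 1
        if count[0] % 30 == 0:
            rospy.loginfo("%dx%d %s, ~%.1f fps", msg.width, msg.height,
                          msg.encoding, estimate_fps(list(stamps)))

    rospy.init_node("raspicam_listener", anonymous=True)
    # queue_size=1 drops old frames instead of processing a growing backlog
    rospy.Subscriber("/raspicam_node/image_raw", Image, on_image, queue_size=1)
    rospy.spin()


if __name__ == "__main__":
    main()
```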


Robot Ignite Academy

Well, now that you are all set up... what next?

Well, you should learn how to use ROS Kinetic and harness all its power! Try this ROS in 5 Days course.

Then move on to object recognition, perception, person detection and recognition, or creating a simulation of your future robot that your ROS Raspberry Pi will control...

The best place to do it FAST and EASY is Robot Ignite Academy. There you will learn all this and much more.

ROS Development Studio (ROSDS)

Want to practice freely? Try ROS Development Studio. There you can test all your programs in simulation first and, when they are ready, deploy them to your ROS Raspberry Pi without any conversion.

Here is the list of all the materials used in this ROS Raspberry Pi tutorial:


Navigation is one of the most challenging tasks in robotics. To reach a goal or follow a trajectory, the robot must know its environment and localize itself using sensors and a map.

Once a robot has this information, it can start navigating by following a sequence of points toward a goal. That is our starting point in this post. Using a very popular ground robot, TurtleBot 2, we are going to perform a navigation task, or at least part of one.

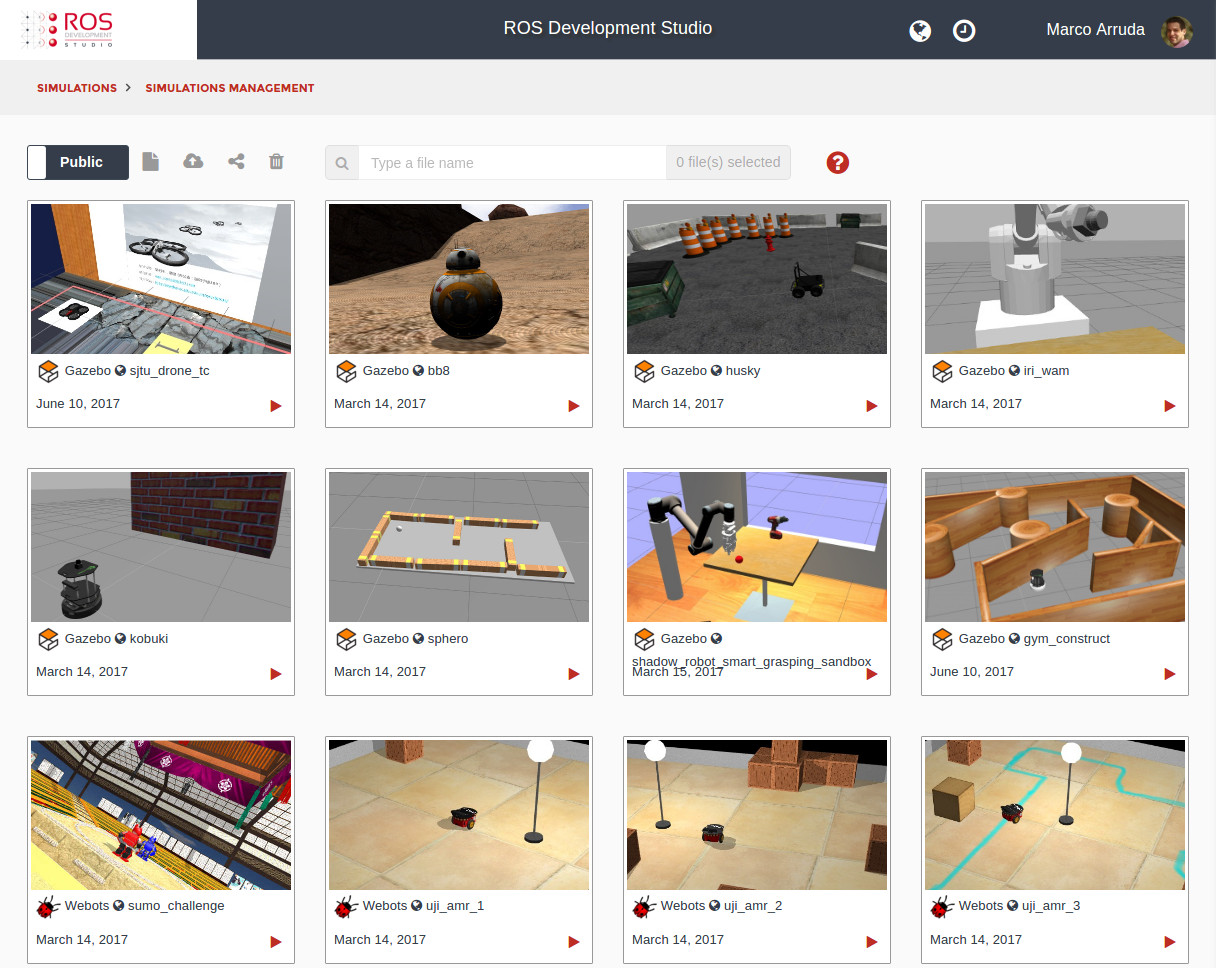

To program the robot, we are going to use RDS (ROS Development Studio). Before starting, make sure you are logged in with your credentials, can see the public simulation list (as below), and can run the Kobuki Gazebo simulation.

At this point, you should have the simulation running (image below). On the left side of the screen, you have a Jupyter Notebook with the basics and some instructions about the robot. In the center is the simulation. On the right are an IDE and a terminal. You can put each one in full-screen mode or rearrange the screen as you wish. Feel free to explore it!

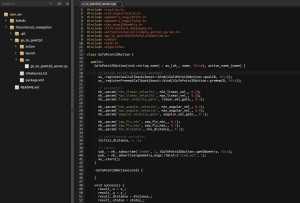

So, you have already noticed that the simulation is running and the robot is ready, and you may have already sent some velocity commands. Now let’s look at our action server, which sends the velocity commands the robot needs to reach a goal. First, clone this repository [https://marcoarruda@bitbucket.org/TheConstruct/theconstruct_navigation.git] into your workspace (yes, your cloud workspace, using RDS!). Done? Let’s explore it for a while. Open the file src/theconstruct_navigation/go_to_point2d/src/go_to_point2d_server.cpp. You should see something like this:

So, let’s start from the main function of the file. There you can see that we create a node (ros::init()) and a GoToPoint2DAction object, an instance of the class defined at the beginning of the file. Once this object is created, all the methods and behaviors of the class are active.

Now, looking inside the class, we can see some methods and attributes. The attributes are used only inside the class, while the public methods form the interface between the object and our ROS node.

When the object is instantiated, it registers two callbacks with the actionlib library: goalCB, which receives the goal (the point we want to send the robot to), and preemptCB, which allows us to interrupt the task. It also reads some parameters from the launch file. Finally, it creates a publisher for the velocity topic and subscribes to the odometry topic, which is used to localize the robot.
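For readers who prefer Python, the same structure can be sketched with actionlib's SimpleActionServer. It offers register_goal_callback() and register_preempt_callback(), which correspond to the C++ registerGoalCallback() and registerPreemptCallback(). This is not the repository’s code. Several names are assumptions: the action name "go_to_point2d", the topics /cmd_vel and /odom, and the go_to_point2d.msg import path. The control loop and parameter loading are also left out.

```python
class GoToPointServer(object):
    """Goal/preempt bookkeeping around a SimpleActionServer-like object (a sketch)."""

    def __init__(self, server, cmd_pub=None):
        self.server = server
        self.cmd_pub = cmd_pub  # publisher for velocity commands
        self.goal = None        # goal currently being pursued
        self.pose = None        # latest (x, y) position from odometry
        server.register_goal_callback(self.goal_cb)
        server.register_preempt_callback(self.preempt_cb)

    def goal_cb(self):
        # Accept the newest goal; a control loop would then drive towards it.
        self.goal = self.server.accept_new_goal()

    def preempt_cb(self):
        # Stop pursuing the current goal and report it as preempted.
        self.goal = None
        self.server.set_preempted()

    def odom_cb(self, msg):
        p = msg.pose.pose.position
        self.pose = (p.x, p.y)


def main():
    import rospy
    import actionlib
    from geometry_msgs.msg import Twist
    from nav_msgs.msg import Odometry
    # Hypothetical module path for the messages generated from GoToPoint2D.action.
    from go_to_point2d.msg import GoToPoint2DAction

    rospy.init_node("go_to_point2d_server_sketch")
    server = actionlib.SimpleActionServer("go_to_point2d", GoToPoint2DAction, auto_start=False)
    node = GoToPointServer(server, rospy.Publisher("/cmd_vel", Twist, queue_size=1))
    rospy.Subscriber("/odom", Odometry, node.odom_cb)
    server.start()
    rospy.spin()


if __name__ == "__main__":
    main()
```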

Let’s compile it! In the terminal, enter the catkin_ws directory (cd catkin_ws) and compile the workspace (catkin_make). It may take a few minutes, because the message files are being generated. The action message is defined in theconstruct_navigation/action/GoToPoint2D.action. You can explore it there to see what it expects and delivers.

Finally, let’s run the action server. Use the launch file to set the parameters:

roslaunch go_to_point2d go_to_point2d_server.launch

Did the robot move? No? Great! The server is waiting for messages, so it should not send any command until we create an action client and send it requests. First, let’s take a look at the launch file:

Notice that some parameters define the limits of the robot’s operation. The first three set the maximum and minimum linear velocity and a gain. The gain sets the robot’s speed in a straight line, since that speed depends on the distance between the robot and the goal point.

The next three parameters do the same for the angular velocity.
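One way the first six parameters could shape the velocity commands is a saturated proportional controller: speed = gain * error, clamped between the minimum and the maximum. This sketch treats the minimum as a floor, so the robot does not crawl when it is close to the goal. That is an assumption about how the server uses it. The helper names and the (min, max, gain) values below are made up.

```python
import math


def saturate(value, min_abs, max_abs):
    """Clamp |value| into [min_abs, max_abs] while keeping its sign (0 stays 0)."""
    if value == 0:
        return 0.0
    magnitude = min(max(abs(value), min_abs), max_abs)
    return math.copysign(magnitude, value)


def velocity_command(distance, heading_error, lin=(0.05, 0.3, 0.5), ang=(0.1, 1.0, 1.5)):
    """Return (linear, angular) speeds from the distance and heading errors.

    lin and ang are hypothetical (min, max, gain) triples.
    """
    lin_min, lin_max, lin_gain = lin
    ang_min, ang_max, ang_gain = ang
    v = saturate(lin_gain * distance, lin_min, lin_max)
    w = saturate(ang_gain * heading_error, ang_min, ang_max)
    return v, w
```

For example, velocity_command(0.4, 0.0) gives (0.2, 0.0). A distance of 10 m would be capped at the maximum of 0.3 m/s.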

Finally, the last three parameters set a tolerance for the robot. The robot’s odometry and yaw measurements are not perfect, so we have to accept some error. The tolerance cannot be too small, otherwise the robot will never reach its goal. If it is too big, the robot will stop very far from the goal (how far is acceptable depends on the robot’s perception).
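A goal check along these lines compares the odometry pose with the goal, using one tolerance per axis. This sketch reads the three tolerances as x, y and yaw, which is an assumption. The helper names and default values are hypothetical. The yaw comes from the odometry quaternion. The angle difference is wrapped, so that 3.1 rad and -3.1 rad count as close.

```python
import math


def yaw_from_quaternion(x, y, z, w):
    """Yaw (rotation about z) in radians from a unit quaternion."""
    return math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def angle_diff(a, b):
    """Signed difference a - b wrapped to [-pi, pi]."""
    return math.atan2(math.sin(a - b), math.cos(a - b))


def goal_reached(pose, goal, tol_x=0.05, tol_y=0.05, tol_yaw=0.1):
    """pose and goal are (x, y, yaw) tuples; tolerances are hypothetical values."""
    return (abs(goal[0] - pose[0]) <= tol_x
            and abs(goal[1] - pose[1]) <= tol_y
            and abs(angle_diff(goal[2], pose[2])) <= tol_yaw)
```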

Now that we have the basic idea of how this package works, let’s use it! To create an action client and send a goal to the server, we are going to use the Jupyter Notebook and create a node in Python. You can use the following code and watch the robot go to the points:
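Here is a minimal sketch of such an action client. Several names are assumptions: the module go_to_point2d.msg, the action name "go_to_point2d" and the goal fields x and y. Check GoToPoint2D.action and the launch file for the real names. SimpleActionClient.wait_for_result() expects a rospy.Duration as its timeout.

```python
def send_points(client, make_goal, points, timeout):
    """Send each (x, y) point as a goal, one after another.

    `client` behaves like actionlib.SimpleActionClient and `make_goal` turns
    (x, y) into a goal message. Returns a list of (point, finished, state).
    """
    results = []
    client.wait_for_server()
    for point in points:
        client.send_goal(make_goal(*point))
        finished = client.wait_for_result(timeout)
        if not finished:
            client.cancel_goal()  # give up on this point before sending the next
        results.append((point, finished, client.get_state()))
    return results


def main():
    import rospy
    import actionlib
    # Hypothetical: module and field names generated from GoToPoint2D.action.
    from go_to_point2d.msg import GoToPoint2DAction, GoToPoint2DGoal

    def make_goal(x, y):
        return GoToPoint2DGoal(x=x, y=y)  # assumed goal fields

    rospy.init_node("go_to_point2d_client", anonymous=True)
    client = actionlib.SimpleActionClient("go_to_point2d", GoToPoint2DAction)
    points = [(1.0, 0.0), (1.0, 1.0)]  # example targets in the odometry frame
    for point, finished, state in send_points(client, make_goal, points, rospy.Duration(60)):
        rospy.loginfo("goal %s finished=%s state=%d", point, finished, state)


if __name__ == "__main__":
    main()
```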

Restart the notebook kernel before running it, because we have compiled a new package. Execute the cells one by one, in order, and you’ll see the robot go to the point!

If you have any doubts about how to do it, please leave a comment. You can also check this video, where all the steps described in this post are done:

[ROS Q&A] How to test ROS algorithms using ROS Development Studio

Related ROS Answers Forum question: actionlib status update problem